3D-RE-GEN

3D Reconstruction of Indoor Scenes with a Generative Framework

3D-RE-GEN generates separable, ground-aligned 3D scenes from a single image.

Overview

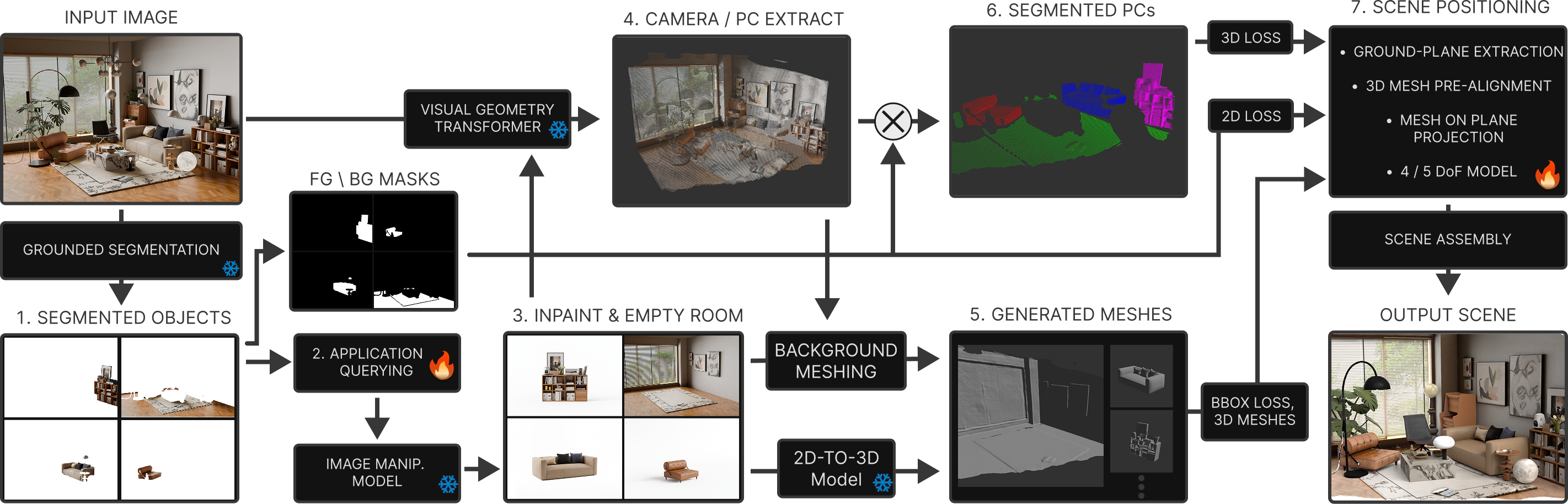

3D-RE-GEN transforms a single photo into a complete, editable 3D scene with individual objects and a reconstructed background. Our framework combines instance segmentation, context-aware inpainting, 2D-to-3D asset generation, and constrained optimization to produce physically plausible, production-ready scenes.

Pipeline

Our pipeline begins by segmenting objects in the input image. Application-Querying, a novel visual prompting technique, inpaints occluded parts using full scene context. We estimate camera parameters and reconstruct 3D point clouds, while each object is processed through a 2D-to-3D generative model to create textured assets. Finally, a differentiable renderer optimizes object positions using our 4-DoF ground alignment constraint, ensuring physically plausible scenes.

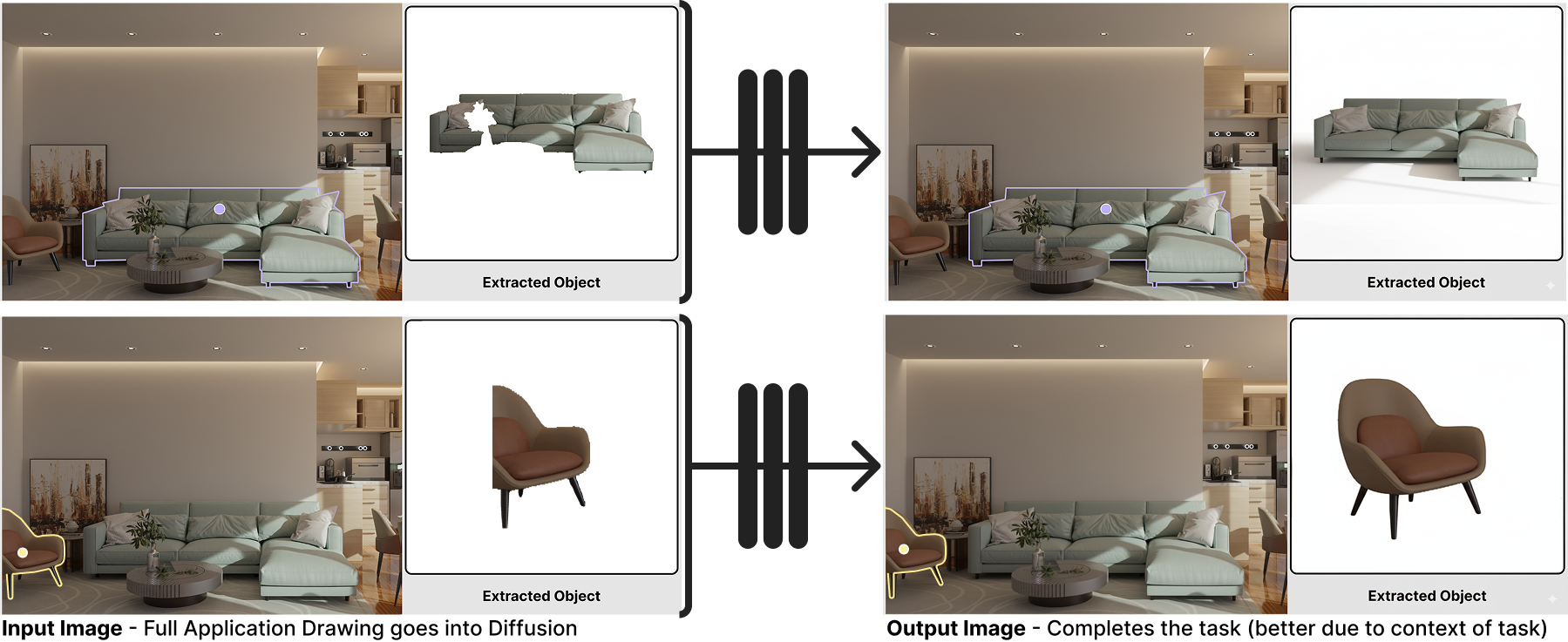

Application-Querying — Application-Querying (A-Q) handles occluded objects by giving generative inpainting models rich scene context. Rather than extracting only the occluded segment, A-Q constructs a composite query image structured like a user interface: one panel shows the full scene with the target object outlined, while the second displays the extracted segment on a white background. This structured visual prompting lets the model use contextual cues such as perspective, lighting, and surrounding style to complete missing parts with scene-aware details.
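The two-panel layout described above can be sketched in a few lines. This is only an illustration of the idea, not the authors' implementation: the function names, the red outline color, the 1-pixel outline, and the side-by-side arrangement are all assumptions, and plain nested lists of RGB tuples stand in for real image arrays to keep the sketch dependency-free.

```python
WHITE = (255, 255, 255)
OUTLINE = (255, 0, 0)  # assumed highlight color; the source does not specify one


def outline_mask(mask):
    """Mark object pixels that touch a non-object pixel or the image border."""
    h, w = len(mask), len(mask[0])
    edge = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    edge[y][x] = True
                    break
    return edge


def build_query_image(scene, mask):
    """Compose a two-panel query: [scene with outlined target | segment on white].

    scene: rows of (r, g, b) tuples; mask: rows of booleans, True on the object.
    """
    edge = outline_mask(mask)
    composite = []
    for y, row in enumerate(scene):
        # Left panel: full scene, with the target's boundary pixels recolored.
        left = [OUTLINE if edge[y][x] else px for x, px in enumerate(row)]
        # Right panel: only the visible segment, everything else white.
        right = [px if mask[y][x] else WHITE for x, px in enumerate(row)]
        composite.append(left + right)
    return composite
```

The composite would then be passed to an inpainting model together with a text instruction; that step depends on the specific model and is not shown here.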

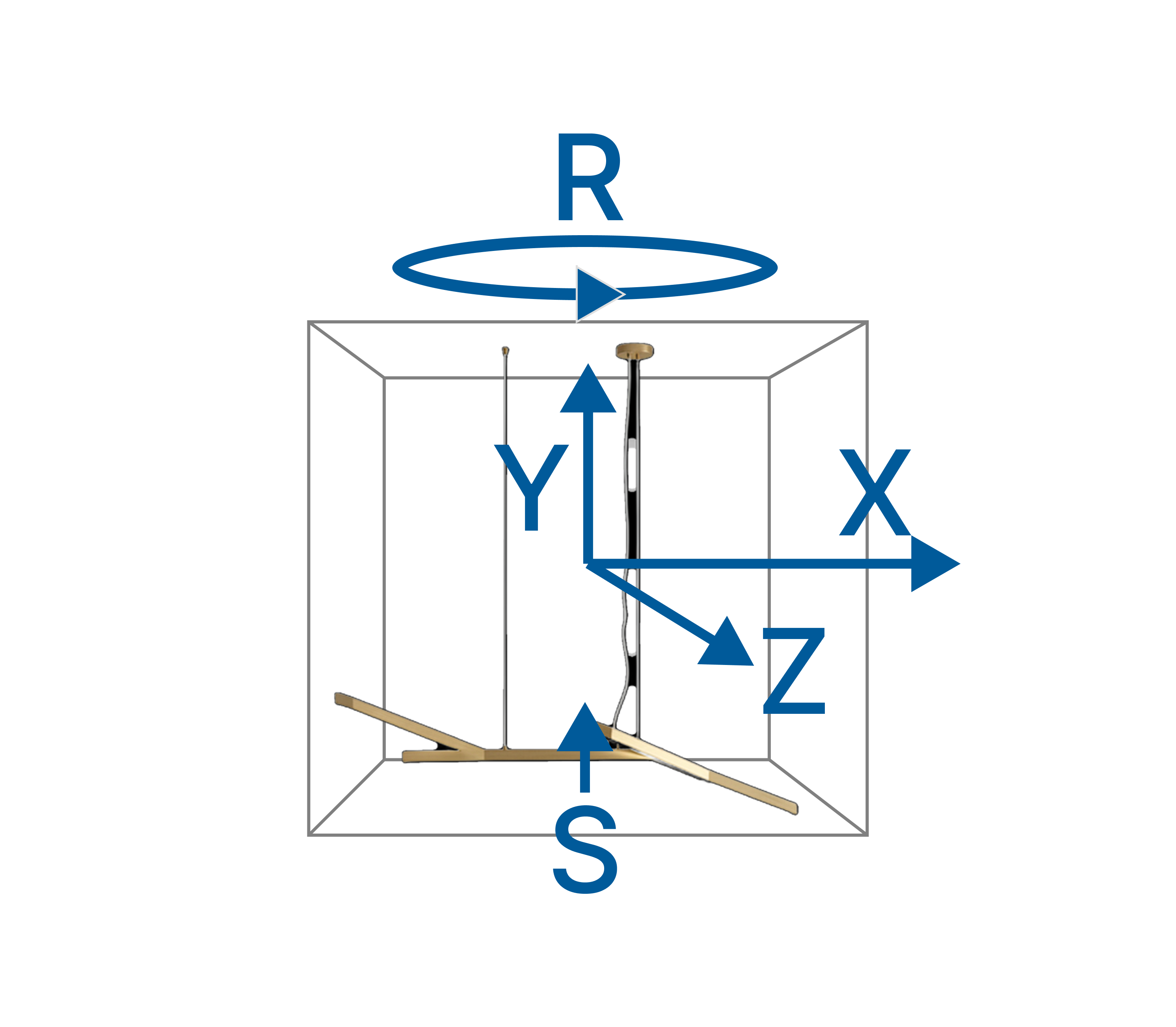

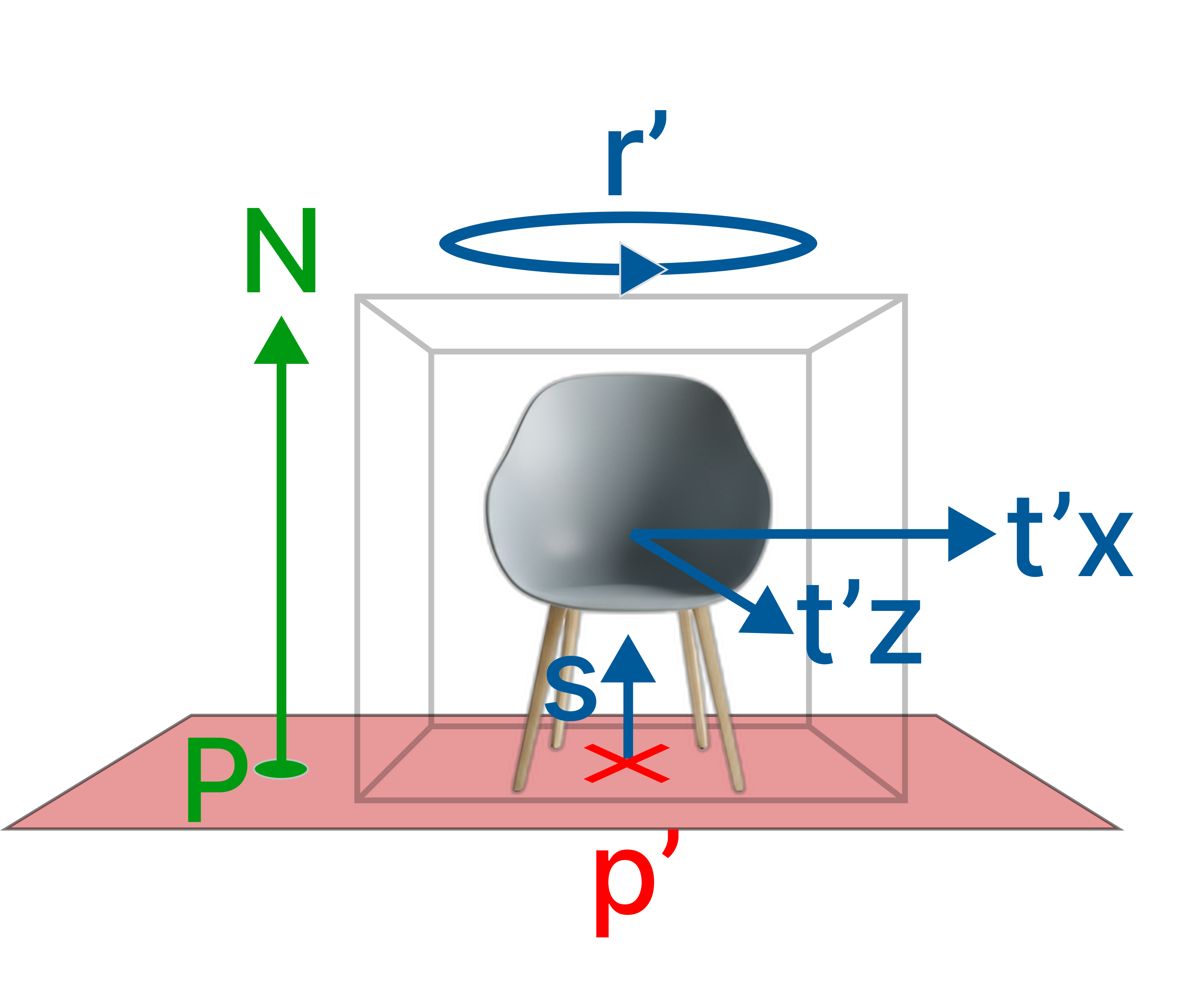

4-DoF Ground Alignment Optimization — To ensure physically plausible object placement, we introduce a novel 4-DoF (four degrees of freedom) constrained optimization approach. While other methods typically use unconstrained 7-DoF transformations that allow objects to float or intersect unrealistically, our method transforms each ground-based object into a plane-local coordinate system derived from the fitted ground plane, constraining movement to four parameters: 2D translation (x, z), 1D yaw rotation, and 1D uniform scale. By restricting translation to the 2D floor surface (explicitly setting the y-component to zero in the local space), we enforce the critical physical constraint that objects must rest on the ground. This dimensionality reduction makes optimization highly robust and ensures all ground-based objects are correctly aligned with the floor plane.
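To make the parameterization concrete, here is a minimal sketch of a 4-DoF transform expressed in a plane-local frame. It is written under several assumptions that the text above does not spell out: the ground plane is given as a point and a normal, the local y axis is the plane normal, the object's base sits at y = 0 in object space, and the names `plane_basis` and `place_on_ground` are hypothetical. The actual method optimizes these four parameters through a differentiable renderer, which this sketch does not attempt.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_basis(normal):
    """Right-handed orthonormal basis (x, y, z) with y along the plane normal."""
    y = normalize(normal)
    # Pick a helper axis that is not parallel to the normal.
    helper = (1.0, 0.0, 0.0) if abs(y[0]) < 0.9 else (0.0, 0.0, 1.0)
    z = normalize(cross(helper, y))
    x = cross(y, z)
    return x, y, z


def place_on_ground(point, params, plane_point, normal):
    """Map an object-space point to world space with a 4-DoF transform.

    params = (tx, tz, yaw, scale). There is no y translation parameter,
    so the object's base (y = 0 in object space) stays on the plane.
    """
    tx, tz, yaw, scale = params
    c, s = math.cos(yaw), math.sin(yaw)
    px, py, pz = (scale * v for v in point)
    # Rotate about the local y axis, then translate within the plane only.
    lx = c * px + s * pz + tx
    ly = py
    lz = -s * px + c * pz + tz
    x, y, z = plane_basis(normal)
    return tuple(plane_point[i] + lx * x[i] + ly * y[i] + lz * z[i]
                 for i in range(3))
```

Because the local y translation is simply absent from `params`, no choice of parameter values can lift the object's base off the plane or push it below; the constraint holds by construction rather than through a penalty term.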

Objects optimized using our 4-DoF model: furniture pieces are automatically aligned to the ground plane while optimizing scale, rotation, and position.

Results

Our evaluation demonstrates that 3D-RE-GEN consistently outperforms existing methods across quantitative and qualitative metrics, generalizing robustly across synthetic, real-world, and outdoor scenes.

Comparisons — Comparisons across four scenes (001, 002, 004, 011). Each row shows left to right: DepR, MIDI, Ours. 3D-RE-GEN excels at recovering sharp object boundaries and generating coherent backgrounds, avoiding the mesh artifacts and object merging issues common in competing approaches.

Full Scenes (Ours + Background) — Our full reconstructions include complete 3D scenes with accurately reconstructed backgrounds. The background integrates naturally with placed objects, providing a complete environment suitable for shadow casting, realistic light bounces, and physics-based simulations in VFX and game pipelines.

Comparison to SAM3D — Meta's SAM3D represents a recent approach to single-image 3D scene reconstruction, allowing users to select objects from an image which are then recreated in 3D and placed into a scene with 7-DoF transformations. However, our comparison reveals several critical limitations: SAM3D currently cannot generate background geometry, leaving scenes incomplete and unsuitable for VFX or game integration. Objects frequently float above or sink below the ground plane due to lack of perspective-aware alignment, breaking physical plausibility. Additionally, reconstructed objects often interpenetrate each other, as the method lacks geometric constraints to prevent collisions. In contrast, our 4-DoF ground alignment ensures objects rest correctly on the floor, while our complete pipeline reconstructs both objects and background, producing physically coherent, production-ready scenes.

Ablations

Ablation studies validate the importance of our key components: the 4-DoF ground alignment constraint and Application-Querying inpainting technique.

Impact of 4-DoF Ground Alignment — Without the 4-DoF constraint, objects float or sink into the floor, breaking physical plausibility. Metrics degrade significantly: Chamfer Distance increases to 0.030, F-Score drops to 0.68, and IoU falls to 0.51. The 4-DoF constraint is essential for producing physically plausible scenes ready for simulation and animation.
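For readers unfamiliar with the geometric metrics quoted above, the following sketch shows one common way to compute Chamfer Distance and F-Score between two point sets. It is an assumption that these definitions match the evaluation used here: the exact variant (squared or unsquared distances, sum or average of the two directions, the F-Score threshold, and how points are sampled from meshes) is not stated on this page. The brute-force nearest-neighbor search is for clarity only.

```python
import math


def nearest_distances(src, dst):
    """For each point in src, the Euclidean distance to its nearest point in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean nearest-neighbor distance, both directions."""
    d_ab = nearest_distances(a, b)
    d_ba = nearest_distances(b, a)
    return sum(d_ab) / len(d_ab) + sum(d_ba) / len(d_ba)


def f_score(pred, gt, threshold):
    """Harmonic mean of precision and recall at a distance threshold."""
    precision = sum(d <= threshold for d in nearest_distances(pred, gt)) / len(pred)
    recall = sum(d <= threshold for d in nearest_distances(gt, pred)) / len(gt)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Under these definitions a lower Chamfer Distance and a higher F-Score both indicate a closer match to the reference geometry, which is consistent with the direction of the changes reported in the ablation.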

Impact of Application-Querying — Removing Application-Querying yields incomplete objects lacking scene awareness. Background quality degrades, and assets lose context-aware details. Without A-Q, 2D metrics (SSIM) drop from 0.54 to 0.27, and perceptual metrics (LPIPS) worsen from 0.34 to 0.66. This validates that structured visual prompting is critical for high-quality, context-aware object recovery.
